Overview

IRP offers failover capabilities that ensure Improvements are preserved in case of planned or unplanned downtime of the IRP server.

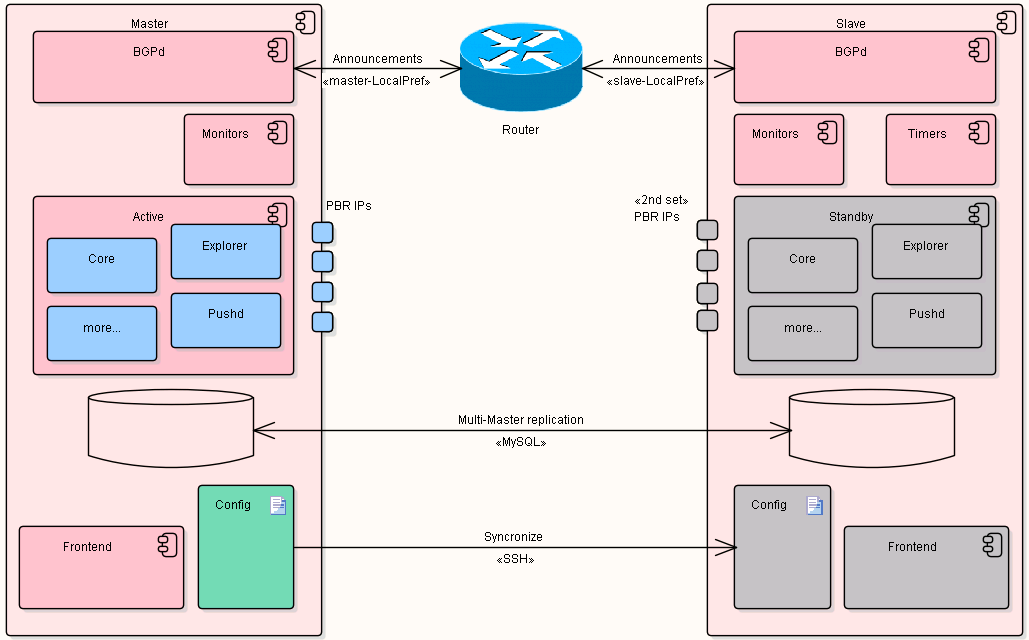

IRP’s failover feature uses a master-slave configuration. A second instance of IRP must be deployed to enable failover. For details about failover configuration and troubleshooting, refer to Failover Configuration.

IRP’s failover solution relies on:

- a slave node running the same version of IRP as the master node,

- MySQL Multi-Master replication of the ‘irp’ database,

- announcement of the replicated Improvements by both nodes, with different LocalPref and/or communities,

- monitoring by the slave node of BGP announcements originating from the master node, relying on the higher precedence of the master’s announced prefixes,

- activation/deactivation of slave IRP components when the master fails or resumes operation,

- synchronization of the master configuration to the slave node.
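The announcement scheme above depends on ordinary BGP best-path selection, in which the route with the higher LocalPref wins. The Python sketch below models only that single step of the BGP decision process (everything else is ignored) to show why the slave’s copies of the same Improvements stay inactive while the master’s are present. The LocalPref values 200 and 100, the prefix and the next hop are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Announcement:
    prefix: str
    origin: str      # which IRP node announced it: "master" or "slave"
    next_hop: str    # hypothetical next hop chosen by the Improvement
    local_pref: int


def best_path(announcements, prefix):
    """Return the announcement the router would prefer for `prefix`.

    Only the LocalPref step of BGP best-path selection is modelled:
    the highest LocalPref wins. Returns None if nothing is announced.
    """
    candidates = [a for a in announcements if a.prefix == prefix]
    if not candidates:
        return None
    return max(candidates, key=lambda a: a.local_pref)


MASTER_PREF = 200  # hypothetical value
SLAVE_PREF = 100   # hypothetical value; must be lower than the master's

# Both nodes announce the same Improvement; only LocalPref differs.
rib = [
    Announcement("192.0.2.0/24", "master", "198.51.100.1", MASTER_PREF),
    Announcement("192.0.2.0/24", "slave", "198.51.100.1", SLAVE_PREF),
]
print(best_path(rib, "192.0.2.0/24").origin)  # master

# If the master goes down, its announcements are withdrawn and the
# slave's identical Improvement becomes the best path.
rib = [a for a in rib if a.origin != "master"]
print(best_path(rib, "192.0.2.0/24").origin)  # slave
```

Because both nodes announce identical Improvements, the router needs no reconfiguration when the master disappears: the already-present slave route simply becomes the best one.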

Key points of the failover setup shown in the diagram above:

- two IRP nodes: Master and Slave,

- grayed-out components are in standby mode: their services are stopped or operate in a limited way. For example, the Frontend detects that it runs on the slave node and prohibits any configuration changes while still offering access to reports, graphs and dashboards,

- configuration changes are pushed by the master to the slave during synchronization. SSH is used to connect to the slave,

- MySQL Multi-Master replication is set up for the ‘irp’ database between the master and slave nodes, using MySQL’s existing Multi-Master replication functionality,

- the master IRP node is fully functional: it collects statistics, queues prefixes for probing, probes them and eventually makes Improvements. All intermediate and final results are stored in MySQL and, through replication, also reach the slave’s database,

- Bgpd runs on both the master and slave IRP nodes. Both make the same announcements, but with different LocalPref/communities,

- Bgpd on the slave node monitors the number of master announcements received from the router (master announcements have higher priority than the slave’s),

- timers are used to prevent flapping between failover and failback.
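The slave-side monitoring and timer logic described above can be sketched as a small state machine. This is a hypothetical illustration, not IRP’s implementation: the class name, the `min_master_prefixes` threshold, the two delay values and the split between always-on and standby components are assumptions (only the component names come from the text). Time is passed in explicitly so the behavior is easy to follow. A condition must persist for the whole hold-down period before the slave switches state, which is what prevents flapping.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Component names come from the text; which ones stay up on a standby
# slave is an assumption for this sketch.
ALWAYS_ON: Tuple[str, ...] = ("Bgpd", "Frontend")
STANDBY: Tuple[str, ...] = ("Core", "Explorer", "Irppushd")


def slave_components(active: bool) -> Tuple[str, ...]:
    """Components the slave runs when standing by (False) or active (True)."""
    return ALWAYS_ON + STANDBY if active else ALWAYS_ON


@dataclass
class FailoverMonitor:
    """Hypothetical slave-side monitor with hold-down timers (seconds)."""
    min_master_prefixes: int        # fewer master announcements => master down
    failover_delay: float           # how long the master must stay down
    failback_delay: float           # how long the master must stay back
    active: bool = False            # True while the slave has taken over
    _since: Optional[float] = None  # start of the current deviation

    def observe(self, now: float, master_prefixes: int) -> bool:
        """Feed one sample and return whether the slave should be active."""
        master_down = master_prefixes < self.min_master_prefixes
        # A deviation is a condition that contradicts the current state:
        # master down while standing by, or master up while active.
        deviating = master_down != self.active
        if not deviating:
            self._since = None  # condition cleared: reset the timer
            return self.active
        if self._since is None:
            self._since = now   # condition just appeared: start the timer
        delay = self.failback_delay if self.active else self.failover_delay
        if now - self._since >= delay:
            self.active = not self.active  # failover or failback
            self._since = None
        return self.active


monitor = FailoverMonitor(min_master_prefixes=1, failover_delay=30,
                          failback_delay=300)
print(monitor.observe(0, 500))   # False: master healthy
print(monitor.observe(10, 0))    # False: master gone, timer started
print(monitor.observe(40, 0))    # True: 30 s elapsed, slave takes over
print(monitor.observe(50, 500))  # True: master back, failback timer started
print(monitor.observe(100, 0))   # True: master lost again, timer reset
print(slave_components(monitor.active))
```

Using a longer failback delay than failover delay is one common design choice: the slave takes over quickly, but hands control back only once the master has been stable for a while.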

Requirements

Setting up failover requires:

- a second server on which to install the slave,

- MySQL Multi-Master replication of the ‘irp’ database,

- a second set of BGP sessions, established by the slave node,

- a second set of PBR IP addresses, assigned to the slave node so that it can perform probing,

- a second set of Improvements, announced to the router by the slave node,

- a failover license for the slave node,

- key-based SSH authentication from master to slave, used to synchronize the IRP configuration from master to slave,

- IRP set up in Intrusive mode on the master node.
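The MySQL Multi-Master requirement usually rests on a few standard server options. The helper below is a generic two-node sketch, not IRP’s shipped configuration: it assumes replication of the whole `irp` database (IRP may restrict replication to specific tables), numbers the master 1 and the slave 2, and leaves out the replication users and the `CHANGE MASTER TO` / `CHANGE REPLICATION SOURCE TO` statements that actually link the two servers.

```python
def mysql_replication_options(node_index: int, database: str = "irp") -> str:
    """Return a [mysqld] fragment for one node of a two-node multi-master setup.

    node_index is 1 for the master and 2 for the slave (hypothetical
    numbering). The auto-increment settings make the nodes generate
    interleaved IDs (1, 3, 5, ... versus 2, 4, 6, ...) so rows inserted
    on either side never collide when replicated.
    """
    if node_index not in (1, 2):
        raise ValueError("node_index must be 1 (master) or 2 (slave)")
    options = {
        "server-id": node_index,              # must differ between nodes
        "log-bin": "mysql-bin",               # binary log feeds the peer
        "binlog-do-db": database,             # log only the IRP database
        "replicate-do-db": database,          # apply only the IRP database
        "auto_increment_increment": 2,        # two writers in the ring
        "auto_increment_offset": node_index,  # this node's ID slot
    }
    lines = ["[mysqld]"] + [f"{key} = {value}" for key, value in options.items()]
    return "\n".join(lines) + "\n"


print(mysql_replication_options(1))  # fragment for the master
print(mysql_replication_options(2))  # fragment for the slave
```

The interleaved auto-increment offsets matter even though only the master writes in normal operation: after a failover the slave starts inserting rows, and those must not clash with IDs the master already used.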

Failover

Failover works as follows:

- the master synchronizes its configuration to the slave. An SSH channel is used to copy configuration files from master to slave and to restart the necessary services,

- MySQL Multi-Master replication is configured on the relevant ‘irp’ database tables so that the data is available immediately in case of emergency,

- IRP components such as Core, Explorer and Irppushd are stopped or on standby on the slave to prevent split-brain, duplicate probing and duplicate notifications,

- the slave node runs Bgpd and makes exactly the same announcements with a lower BGP LocalPref and/or different communities, thus replicating Improvements as well.
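The configuration sync in the first item can be approximated with standard tools. The function below only builds the command lines. It assumes rsync is installed on both nodes, that each file lives at the same path on master and slave, and that services are managed by systemd. The host `irp-slave.example.net`, the path `/etc/noction/irp.conf` and the service name `bgpd` in the example are placeholders, not confirmed IRP names. `BatchMode=yes` makes SSH fail instead of prompting for a password, so a missing key-based login surfaces as an error rather than a hang.

```python
import shlex
from typing import List, Sequence

SSH = ["ssh", "-o", "BatchMode=yes"]  # fail instead of prompting for a password


def build_sync_commands(slave_host: str,
                        config_paths: Sequence[str],
                        services: Sequence[str],
                        ssh_user: str = "root") -> List[List[str]]:
    """Build (but do not run) the commands for one master-to-slave sync."""
    target = f"{ssh_user}@{slave_host}"
    commands: List[List[str]] = []
    for path in config_paths:
        # -a preserves permissions, ownership and timestamps; -z compresses.
        # rsync's -e option takes the remote shell as one command string.
        commands.append(["rsync", "-az", "-e", " ".join(SSH),
                         path, f"{target}:{path}"])
    if services:
        # The remote command is one string interpreted by the remote shell.
        restart = "systemctl restart " + " ".join(shlex.quote(s) for s in services)
        commands.append(SSH + [target, restart])
    return commands


# Placeholder host, path and service name for illustration only.
for cmd in build_sync_commands("irp-slave.example.net",
                               ["/etc/noction/irp.conf"], ["bgpd"]):
    print(shlex.join(cmd))
```

Each command could then be executed with `subprocess.run(cmd, check=True)`; keeping command construction separate from execution makes the sketch testable without a slave node.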

Failback

Recovery of failed node