Speed is a top priority in networking. Bandwidth-hungry applications and mission-critical systems demand ever more throughput and capacity. While the main demand comes from data-intensive sectors such as stockbroking, commodities trading, online gaming, video streaming, and VoIP, it’s hard to think of any business area that would not benefit from improved network performance.

Latency and throughput are the two essential factors in network performance, and together they define the speed of a network. Throughput is the quantity of data that can pass from source to destination in a given time. Latency is the time it takes for a single data transaction to occur, that is, the time it takes for a packet of data to travel from the source to the destination and back.
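To make the distinction concrete, here is a minimal sketch of a simplified transfer-time model: total time is the number of round trips multiplied by the round-trip latency, plus the payload size divided by the throughput. The function name `transfer_time`, the 100 KB page size, the link speeds, and the assumption of four round trips per page load are all illustrative values chosen for this example, not measurements from any real network.

```python
def transfer_time(size_bytes, rtt_s, throughput_bps, round_trips=1):
    """Estimate the seconds needed to fetch size_bytes.

    Simplified model (an assumption for illustration): each round trip
    costs one full round-trip time, and the payload is then sent at the
    full link throughput. Real protocols behave in more complex ways.
    """
    latency_cost = round_trips * rtt_s
    serialization_cost = (size_bytes * 8) / throughput_bps
    return latency_cost + serialization_cost


PAGE_BYTES = 100_000   # hypothetical 100 KB page
ROUND_TRIPS = 4        # hypothetical number of round trips per page load

# Baseline: 10 Mbit/s link with 100 ms round-trip latency.
baseline = transfer_time(PAGE_BYTES, 0.100, 10e6, ROUND_TRIPS)
# Ten times the throughput, same latency.
more_bandwidth = transfer_time(PAGE_BYTES, 0.100, 100e6, ROUND_TRIPS)
# Same throughput, one fifth of the latency.
lower_latency = transfer_time(PAGE_BYTES, 0.020, 10e6, ROUND_TRIPS)

print(f"baseline:       {baseline:.3f} s")
print(f"more bandwidth: {more_bandwidth:.3f} s")
print(f"lower latency:  {lower_latency:.3f} s")
```

Under these assumed numbers, multiplying the throughput by ten shortens the load only slightly, because the round trips dominate the total, while cutting the latency shortens it considerably.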

Latency and user experience

Latency is one of the key ingredients for a good user experience. The focus in user experience so far has been on maximum bitrates, but once a certain level of throughput has been achieved, latency can be even more important than the throughput, or bitrate, offered.

Why does latency matter? Many people know how frustrating it is to wait for a web page to load over a slow connection. Consumers are becoming increasingly active on the Internet, forming communities to discuss and compare network performance to improve their favorite online activities.

This raises the question of how long end users are willing to wait for a web page to load before abandoning the attempt. The end users’ tolerable waiting time (TWT) is getting shorter.

Studies suggest that feedback during the waiting time, for example percent-done indicators, encourages users to wait longer. The type of task, such as information retrieval, browsing, online purchasing, or downloading, also has an effect on a user’s level of tolerance. Users can become frustrated by slow websites, which are perceived to have lower credibility and quality, while faster websites are thought to be more interesting and attractive.

People are becoming more “latency aware”, and latency is increasingly evaluated and discussed. Fast response time matters. With low latency, the end user has a better web browsing experience because web pages download more quickly. Happy customers mean less churn, and fast response time also means more revenue.

Less latency – more revenue

All services benefit from low latency. System latency is more important than the actual peak data rates for many IP-based applications, such as VoIP, multimedia, e-mail synchronization, and online gaming.

Real-life examples from the Internet show that an increase in latency can significantly decrease revenues for an Internet service.

In a CNET article, Marissa Mayer, Vice President of Search Product and User Experience at Google, mentioned that moving from a 10-result page loading in 0.4 seconds to a 30-result page loading in 0.9 seconds decreased traffic and ad revenues by 20%. It turned out that the cause was not just too many choices, but the fact that the page with 10 results was half a second faster than the page with 30 results. “Google consistently returns search results across many billions of Web pages and documents in fractions of a second. While this is impressive, is there really a difference between results that come back in 0.05 seconds and results that take 0.25 seconds? Actually, the answer is yes. Any increase in time it takes Google to return a search result, causes the number of search queries to fall. Even a very small difference in results’ speed impacts query volume.”

Experiments at Amazon have revealed similar results: every 100 ms increase in load time of Amazon.com decreased sales by one percent.

Tests at Microsoft on live search showed a similar pattern. When search results pages were slowed by one second, queries per user declined by 1.0% and ad clicks per user declined by 1.5%. When the pages were slowed by two seconds, queries per user declined by 2.5% and ad clicks per user declined by 4.4%.

For service providers serving latency-sensitive sectors such as gaming, online trading, video streaming, and VoIP, minimizing latency is a must for keeping customers satisfied. A service provider cannot be competitive without properly addressing latency.

Solution

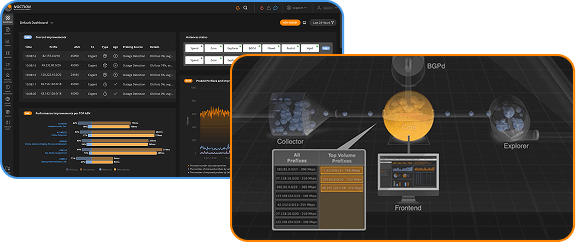

Congestion due to inappropriate routing is a major cause of latency. Early diagnosis and network routing optimization therefore have a high impact on a network’s latency. Traffic engineering solutions such as Noction Intelligent Routing Platform (IRP) help optimize the network and avoid congestion.

While high-frequency trading, gaming, and VoIP continue to lead the demand for low latency, many other applications and network services have also found themselves in need of latency reduction over the past several years. In addition to these markets, there is a growing list of industries where a fast, predictable, and fixed response time is a top business priority.